The AI Revolution Accelerates: From Open-Source GPT-4o Rivals to Higher Emotional Intelligence

The world of artificial intelligence moves at a velocity that often feels impossible to track. Just when we think we’ve reached a plateau of innovation, a single week brings a deluge of updates that reshape our understanding of what machines can achieve. This past week was particularly "insane," marked by the release of powerful open-source multimodal models, significant updates from tech giants like Google and Microsoft, and the long-awaited debut of Anthropic’s Claude 4. We are witnessing a shift from AI as a simple chatbot to AI as an autonomous agent, a creative director, and even a medical consultant.

From ByteDance’s "Bagel"—an open-source challenger to GPT-4o—to studies suggesting that AI now possesses a higher emotional intelligence (EQ) than the average human, the implications are profound. Whether you are a developer looking for the latest coding agents, a creative exploring the boundaries of image generation, or a professional worried about the automation of your industry, the landscape has fundamentally shifted. In this comprehensive guide, we will break down the most significant breakthroughs of the week, exploring the technical nuances, performance benchmarks, and the real-world impact of these rapidly evolving tools.

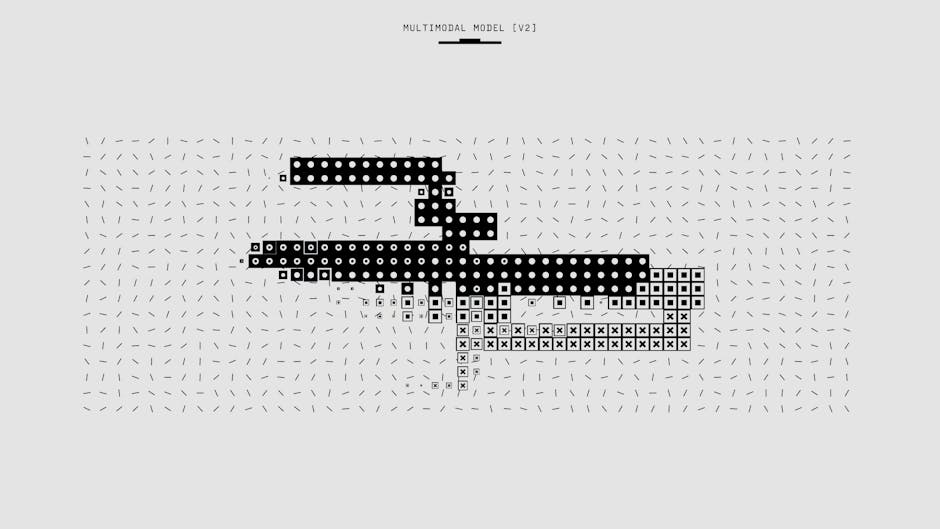

1. The Open-Source Multimodal Frontier: Bagel and MTV Crafter

One of the most exciting developments this week came from ByteDance with the release of Bagel. While closed-source models like GPT-4o have dominated the conversation regarding multimodal capabilities, Bagel represents a massive win for the open-source community. It isn't just a text model; it is a visual powerhouse capable of understanding, generating, and editing images with startling precision.

Bagel: The GPT-4o Challenger

Bagel is a multimodal language model that functions much like a Swiss Army knife for visual tasks. Unlike traditional image generators that operate in a vacuum, Bagel possesses "visual understanding." This means you can upload a blurry, historical photograph and ask the model to identify specific individuals based on captions or context clues. In one demonstration, the model correctly identified "Mr. Fowler" in a low-quality image, describing his attire and position relative to others in the frame.

Beyond identification, Bagel excels at complex reasoning within images. It can solve mathematical equations written on a chalkboard, provide recipes for a photographed dish, or summarize the plot of a movie based on a single poster. What truly sets it apart, however, is its generative capability. It handles text rendering—a notorious weakness for older AI models—with ease. Whether it’s labeling magic potions "SDXL," "Bagel," and "Flux" or generating a 3D animation frame-by-frame, Bagel demonstrates a level of prompt adherence that rivals the best proprietary models.

Advanced Image Editing and Style Transfer

Bagel’s "Thinking" feature—reminiscent of DeepSeek R1—allows it to ponder a request before executing it. This is particularly useful for complex image editing. For example, a user can upload a photo of a woman and prompt the AI to make her "hold a rabbit and stroke it tenderly." The model doesn't just overlay a rabbit; it understands the anatomy and lighting, maintaining consistency with the original image’s clothing and environment.

The model also supports:

- Virtual Try-Ons: Swapping clothes on a model while keeping the original garment’s logo and texture intact.

- Object Removal: Seamlessly removing watermarks or unwanted background elements.

- Style Transfer: Converting real photos into Japanese anime, Ghibli style, 3D animations, or even "claymation" and Jellycat-style plush toy aesthetics.

- Camera Simulation: Generating a series of images that simulate moving a camera through a scene, effectively creating a "Street View" experience from a single static image.

MTV Crafter: Motion Transfer Made Simple

While Bagel handles static imagery, MTV Crafter is pushing the boundaries of character animation. This tool allows users to take a reference photo of any character and a reference video of a person moving, then map those movements onto the character.

The technical architecture pairs a 3D motion data converter with a 4D motion tokenizer. The resulting motion tokens are fed through a motion-aware video model so that the character’s movements remain fluid and anatomically plausible. While the current output quality may not yet reach the cinematic levels of Alibaba’s VACE, the fact that it is open-source means the developer community can iterate and improve upon it rapidly.
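To make the idea of "motion tokens" concrete, here is a minimal sketch of vector quantization, the generic technique of snapping each frame of a motion sequence to its nearest entry in a codebook so the sequence becomes a list of discrete IDs. This is not MTV Crafter’s code: its tokenizer is learned from data, while the codebook, frame layout, and function names below are hypothetical toy values chosen for illustration.

```python
import math

# Hypothetical frame layout: one flat list of joint coordinates per video frame.
Frame = list[float]


def nearest_code(frame: Frame, codebook: list[Frame]) -> int:
    """Return the index of the codebook vector closest to `frame` (Euclidean distance)."""
    return min(range(len(codebook)), key=lambda i: math.dist(frame, codebook[i]))


def tokenize_motion(frames: list[Frame], codebook: list[Frame]) -> list[int]:
    """Turn a continuous motion sequence into a sequence of discrete token IDs."""
    return [nearest_code(frame, codebook) for frame in frames]


def detokenize(tokens: list[int], codebook: list[Frame]) -> list[Frame]:
    """Approximately reconstruct the motion by looking each token up in the codebook."""
    return [codebook[token] for token in tokens]


# Toy codebook and motion: each frame holds two joint coordinates.
codebook = [[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]]
frames = [[0.1, -0.1], [0.9, 0.2], [1.1, 0.8]]

tokens = tokenize_motion(frames, codebook)
print(tokens)  # → [0, 1, 2]
```

Once motion is expressed as tokens like these, a video model can condition on them much as a language model conditions on word tokens. A real system would learn the codebook (for example with a VQ-VAE) rather than hard-code it.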

2. The Intelligence Gap: EQ and Reasoning Benchmarks

Perhaps the most controversial and fascinating news this week involves a study comparing the emotional intelligence of AI models to humans. For years, the "human touch" was considered the final fortress against automation. That fortress may be crumbling.

AI’s Surprising Emotional Intelligence

A recent study tested leading AI models, including GPT-4o, Gemini 1.5 Flash, and Claude 3.5 Haiku, on standard EQ tests. The results were striking: the AI models answered 81 percent of questions correctly on average, while human participants averaged only 56 percent.

This doesn't necessarily mean AI "feels" emotions, but it is significantly better at identifying and simulating emotionally intelligent responses. The AI excelled at choosing the most empathetic and constructive answers in conflict resolution scenarios. This suggests that AI could soon outperform humans in roles such as:

- Coaching and Mentoring: Providing objective, emotionally regulated guidance.

- Therapy and Mental Health Support: Offering instant, high-EQ interactions at a fraction of the cost of human specialists.

- Conflict Resolution: Acting as a neutral, de-escalating mediator in corporate or personal disputes.

UniVG-R1: Mastering Visual Analysis through Chain of Thought

While some models focus on emotion, UniVG-R1 focuses on pure visual logic. Built on Alibaba’s Qwen2-VL, this model uses a two-stage fine-tuning process to achieve state-of-the-art visual reasoning.

- Stage One (Supervised Fine-Tuning, SFT): The model is trained on "Chain of Thought" (CoT) examples, teaching it to break visual problems down into logical steps.

- Stage Two (Reinforcement Learning, RL): The model is then rewarded for correct answers, further refining its "policy," the strategy it uses to solve visual puzzles.
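The reward in Stage Two can be as simple as a rule that checks the final answer. The sketch below assumes an R1-style output format with `<think>` and `<answer>` tags and a GRPO-style step that scores several sampled answers against each other. The tag names, the 0.1 format bonus, and the sample completions are hypothetical and not taken from the UniVG-R1 code.

```python
import re
import statistics

# Assumed output format: reasoning in <think>...</think>, final answer in <answer>...</answer>.
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)
FORMAT_RE = re.compile(r"\s*<think>.*?</think>\s*<answer>.*?</answer>\s*", re.DOTALL)


def reward(completion: str, ground_truth: str) -> float:
    """Rule-based reward: 1.0 for a correct answer plus a 0.1 bonus for the expected format."""
    match = ANSWER_RE.search(completion)
    correct = match is not None and match.group(1).strip().lower() == ground_truth.lower()
    well_formed = FORMAT_RE.fullmatch(completion) is not None
    return (1.0 if correct else 0.0) + (0.1 if well_formed else 0.0)


def group_advantages(rewards: list[float]) -> list[float]:
    """GRPO-style advantages: how much better each sample did than its group's average."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0 for _ in rewards]  # every sample scored the same, so nothing to prefer
    return [(r - mean) / std for r in rewards]


# Hypothetical completions sampled for "Which object reflects a creative girl's characteristics?"
samples = [
    "<think>The drawing shows colour and imagination.</think><answer>drawing</answer>",
    "<think>Maybe the cup?</think><answer>cup</answer>",
    "drawing",  # right word, but no reasoning and no answer tag
]
rewards = [reward(s, "drawing") for s in samples]
print(rewards)  # → [1.1, 0.1, 0.0]
print(group_advantages(rewards))
```

In this sketch, positive advantages nudge the policy toward the completions that earned them and negative ones nudge it away, which is the sense in which RL "refines the policy."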

In practical tests, UniVG-R1 can identify common objects across multiple disparate images (like finding a zebra in a crowded scene) or reason through abstract requests. If asked to find an object that reflects a "creative girl's characteristics," the model "thinks" through the prompt and identifies a drawing as the most logical answer, a task where other vision models frequently fail.

3. Google I/O: The "End of the Creator" as We Know It?

Google’s I/O event was a tour de force of AI integration. While the announcements of Veo 3 (video generation) and Imagen 4 (image generation) made headlines, it was the updates to NotebookLM that truly shocked the creative community.

The Rise of Video Overviews

Google’s NotebookLM has long been a favorite for researchers, allowing users to upload documents and receive summaries or "Audio Overviews" (AI-generated podcasts). However, Google has now previewed Video Overviews.

This feature allows a user to upload a document, a website, or even a YouTube link, and the AI will generate a full, high-quality explainer video featuring AI hosts who discuss the content dynamically. If the finished feature is as effective as the preview suggests, it poses an existential threat to educational content creators: when an AI can turn a dry technical manual into an engaging, visual presentation in minutes, the traditional "explainer video" industry may be fundamentally disrupted.

MedGemma: AI in the Exam Room

Google also released MedGemma, a specialized suite of models designed for medical analysis.

- The 4B Model: A multimodal small language model (SLM) that can analyze X-rays, CT scans, and pathology slides. It can identify lung infections, tumors, and pleural effusions, explaining the findings in layperson’s terms for patients or technical terms for doctors.

- The 27B Model: A text-only model optimized for clinical decision support and medical summarization.

Because these models are open and relatively small, they can run locally, the 4B model even on consumer-grade hardware or edge devices in clinics, so patient data can stay on-site instead of being sent to a cloud service. This democratizes high-level medical diagnostic assistance, potentially saving lives in regions with a shortage of specialized radiologists.

LearnLM and Interactive Education

Furthering its push into education, Google introduced LearnLM, a model fine-tuned on educational best practices. Unlike a standard chatbot that simply gives an answer, LearnLM acts as a "Learning Coach." It creates structured learning plans, asks follow-up questions to test comprehension, and provides interactive quizzes. This "Socratic" approach is designed to make students work through the material themselves rather than use the AI to do their homework.

4. Claude 4: The New King of Coding?

After months of relative silence, Anthropic released Claude 4, featuring two variants: Opus (the heavy hitter) and Sonnet (the fast, everyday model). The focus here is clear: software engineering.

The Power of Extended Thinking

Claude 4 introduces a "Hybrid Reasoning System." Users can opt for instant responses or toggle on "Extended Thinking" for complex tasks. This is particularly effective for coding, where the model needs to map out dependencies across multiple files.
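For developers, "Extended Thinking" is a per-request switch. The sketch below builds the keyword arguments for a call to Anthropic’s Python SDK; the `thinking` parameter with `budget_tokens` is part of Anthropic’s Messages API, but the model ID and budget values are assumptions to check against Anthropic’s current documentation. It only assembles the request, so it runs without an API key.

```python
# Assumed model ID; check Anthropic's model list for the current name.
MODEL_ID = "claude-sonnet-4-20250514"


def build_request(prompt: str, extended_thinking: bool,
                  budget_tokens: int = 8_000, max_tokens: int = 16_000) -> dict:
    """Assemble keyword arguments for client.messages.create().

    With extended_thinking=False the model answers immediately; with True it
    may spend up to `budget_tokens` tokens reasoning before the final answer.
    """
    request = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if extended_thinking:
        # The thinking budget must be at least 1,024 tokens and smaller than max_tokens.
        if not 1_024 <= budget_tokens < max_tokens:
            raise ValueError("budget_tokens must be >= 1024 and < max_tokens")
        request["thinking"] = {"type": "enabled", "budget_tokens": budget_tokens}
    return request


quick = build_request("Rename this variable across the file.", extended_thinking=False)
deep = build_request("Trace how changes to the auth module affect every caller.", extended_thinking=True)
print("thinking" in quick, deep["thinking"])
```

With the official `anthropic` package installed and an API key configured, the request would be sent as `anthropic.Anthropic().messages.create(**deep)`; the response then carries `thinking` content blocks ahead of the final `text` block.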

On SWE-bench (a software engineering benchmark), Claude 4 with thinking enabled outperformed both OpenAI’s o3 and Google’s Gemini 2.5 Pro. This makes it an attractive tool for developers working on large-scale codebases. However, this intelligence comes at a steep price. Claude 4 Opus is currently one of the most expensive models on the market: its list price is $15 per million input tokens and $75 per million output tokens, or roughly $30 per million tokens on a blended basis. For many developers, the marginal gains in coding accuracy may not justify the significant increase in API costs compared to more affordable models like DeepSeek or Gemini.
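The pricing trade-off comes down to simple arithmetic. The sketch below uses Claude Opus 4’s launch list prices ($15 per million input tokens, $75 per million output tokens) and shows that a 3:1 input-to-output blend reproduces the roughly $30 figure. The token counts and the cheaper model’s prices are hypothetical placeholders, so substitute your own numbers.

```python
PER_MILLION = 1_000_000


def blended_price(input_price: float, output_price: float,
                  input_share: int = 3, output_share: int = 1) -> float:
    """Weighted price per million tokens for a given input:output token mix."""
    return (input_price * input_share + output_price * output_share) / (input_share + output_share)


def job_cost(input_tokens: int, output_tokens: int,
             input_price: float, output_price: float) -> float:
    """Dollar cost of one workload; prices are in dollars per million tokens."""
    return (input_tokens * input_price + output_tokens * output_price) / PER_MILLION


# Claude Opus 4 list prices at launch (USD per million tokens).
OPUS_IN, OPUS_OUT = 15.0, 75.0
# Hypothetical cheaper model, for comparison only.
CHEAP_IN, CHEAP_OUT = 1.0, 4.0

print(blended_price(OPUS_IN, OPUS_OUT))  # → 30.0

# Hypothetical coding session: 2M tokens of context read, 500K tokens written.
# Thinking tokens are billed as output, so extended thinking raises the second number.
print(job_cost(2_000_000, 500_000, OPUS_IN, OPUS_OUT))    # → 67.5
print(job_cost(2_000_000, 500_000, CHEAP_IN, CHEAP_OUT))  # → 4.0
```

Because output tokens cost five times as much as input tokens here, workloads that generate long answers or long reasoning traces feel the price gap far more than workloads that mostly read code.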

Benchmarking the Reality

While Claude 4 excels in coding, independent evaluators like LiveBench and Artificial Analysis suggest it may not be the "GPT-5 moment" some were hoping for. In graduate-level reasoning and visual tasks, it often trails behind OpenAI’s o3 and even Google’s latest models. Furthermore, its closed-source nature and high pricing put it in a difficult position against the rising tide of highly capable, cheaper open-source models.

5. Microsoft’s Autonomous Agents and the Future of Work

Not to be outdone, Microsoft’s Build event introduced tools that move AI from "assistant" to "agent." The distinction is subtle but vital: an assistant helps you do the work; an agent does the work for you.

NLWeb and the Model-Agnostic Chatbot

Microsoft released NLWeb, an open-source framework that allows website owners to add AI-powered chat interfaces to their sites for free. What makes NLWeb unique is that it is model-agnostic. You aren't locked into Microsoft’s ecosystem; you can plug in GPT-4o, Gemini, or even a local Llama model.
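Model-agnostic means the chat layer depends on an interface rather than on a specific vendor. The sketch below illustrates that design idea with a Python `Protocol`; it is not NLWeb’s actual API, and the two backends and the sample event are hypothetical stand-ins for code that would wrap GPT-4o, Gemini, or a local Llama model in a real deployment.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Anything with a complete() method can power the site's chatbot."""

    def complete(self, prompt: str) -> str: ...


class HostedModelStub:
    """Hypothetical stand-in for a hosted API model such as GPT-4o or Gemini."""

    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt.splitlines()[-1]}"


class LocalModelStub:
    """Hypothetical stand-in for a local model such as Llama."""

    def complete(self, prompt: str) -> str:
        return f"[local] {prompt.splitlines()[-1]}"


def answer(question: str, site_context: str, model: ChatModel) -> str:
    """Build one prompt from site content and hand it to whichever model is configured."""
    prompt = f"Site content:\n{site_context}\n\nQuestion: {question}"
    return model.complete(prompt)


context = "Seattle Tech Meetup, Saturday 2 p.m."
for backend in (HostedModelStub(), LocalModelStub()):
    print(answer("Any events this weekend?", context, backend))
```

Swapping vendors then means writing one new backend class while the prompt-building and site logic stay untouched.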

It also supports MCP (Model Context Protocol), allowing the chatbot to pull data from external apps like Notion, MongoDB, or Slack. This means a customer could ask a website's chatbot, "Is there a geeky event in Seattle this weekend?" and the AI will search the site's database, check the user's preferences, and provide a personalized recommendation.
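Under the hood, MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with the `tools/call` method. The sketch below builds such a request and reads the text out of a response. The `search_events` tool, its arguments, and the sample response are hypothetical, standing in for whatever tools a real MCP server exposes.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry unique IDs so responses can be matched


def tool_call_request(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def result_text(response_json: str) -> str:
    """Join the text content blocks from a tools/call response."""
    result = json.loads(response_json)["result"]
    return "\n".join(block["text"] for block in result["content"] if block["type"] == "text")


request = tool_call_request("search_events", {"city": "Seattle", "topic": "geek", "when": "this weekend"})
print(request)

# Hypothetical response a server might send back for request id 1.
response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "Board game night, Saturday 7 p.m."}], "isError": False},
})
print(result_text(response))
```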

GitHub Copilot: The Autonomous Coding Agent

The most significant update for developers is the new GitHub Copilot Coding Agent. Unlike the standard Copilot, which suggests lines of code as you type, the Agent can handle entire feature requests autonomously.

Imagine you need to implement a new authentication system. You can give the request to the agent, and it will work in the background—editing multiple files, running tests, and fixing its own bugs. When it’s finished, it presents you with a Pull Request (PR). Your job shifts from "writer" to "editor," reviewing the AI's work and merging it into the main branch. This mirrors the functionality of Google’s "Jules" and OpenAI’s "Codex," signaling a massive shift in how software will be built in the coming years.
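The write, test, fix, and hand-off cycle described above can be sketched as a loop. This illustrates the general agent pattern, not GitHub’s implementation: `propose_change` and `run_tests` are hypothetical callables standing in for the model’s edits and the repository’s test suite, and "opening a PR" is reduced to a status string.

```python
from dataclasses import dataclass, field
from typing import Callable

# A change proposer gets the task plus the last test failure (empty on the first try).
ProposeFn = Callable[[str, str], str]
# A test runner returns (passed, failure_message).
TestFn = Callable[[str], tuple[bool, str]]


@dataclass
class AgentResult:
    status: str  # "pull_request_ready" or "needs_human_help"
    attempts: int
    log: list[str] = field(default_factory=list)


def run_agent(task: str, propose_change: ProposeFn, run_tests: TestFn,
              max_attempts: int = 3) -> AgentResult:
    """Edit, test, and retry until the tests pass or the attempt budget runs out."""
    feedback, log = "", []
    for attempt in range(1, max_attempts + 1):
        change = propose_change(task, feedback)
        passed, feedback = run_tests(change)
        log.append(f"attempt {attempt}: {'pass' if passed else 'fail'}")
        if passed:
            # A human still reviews and merges; the agent only hands over the PR.
            return AgentResult("pull_request_ready", attempt, log)
    return AgentResult("needs_human_help", max_attempts, log)


# Hypothetical stubs: the first draft fails a test, the revision passes.
def fake_propose(task: str, feedback: str) -> str:
    return "revised patch" if feedback else "first draft"


def fake_tests(change: str) -> tuple[bool, str]:
    return (True, "") if change == "revised patch" else (False, "test_login failed")


result = run_agent("Add an authentication system", fake_propose, fake_tests)
print(result.status, result.attempts, result.log)
```

The attempt cap is the important design choice: it bounds cost and guarantees that a stuck task comes back to a human instead of looping forever.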

Conclusion: Navigating the AI Deluge

The sheer volume of AI advancements this week is a testament to the fact that we are in the "scaling" phase of the AI revolution. We are no longer just seeing better chatbots; we are seeing the emergence of specialized agents that can diagnose diseases, teach complex physics, and write entire software modules with minimal human intervention.

Key Takeaways:

- Open-Source is Catching Up: Models like Bagel and MedGemma prove that high-end multimodal capabilities are no longer the exclusive domain of Big Tech.

- The Rise of the Agent: From Google’s Video Overviews to Microsoft’s Coding Agents, the trend is moving toward autonomy. AI is increasingly capable of executing multi-step tasks in the background.

- EQ is the New Frontier: AI’s ability to outperform humans in emotional intelligence tests suggests a future where machines play a central role in mental health and interpersonal coaching.

- Coding is the Primary Battleground: With Claude 4, o3, and Gemini 2.5 Pro all vying for the "best coder" title, developers are the biggest winners of this competition.

As these tools become more accessible, the barrier to entry for complex tasks—be it video production, medical analysis, or software engineering—continues to drop. The challenge for us as humans is no longer just "how to use" these tools, but how to remain relevant in a world where the AI might just be more "intelligent" than us in almost every measurable way. Stay curious, keep testing, and embrace the agentic future.
